Overview

SWE-bench tests AI systems' ability to solve GitHub issues.

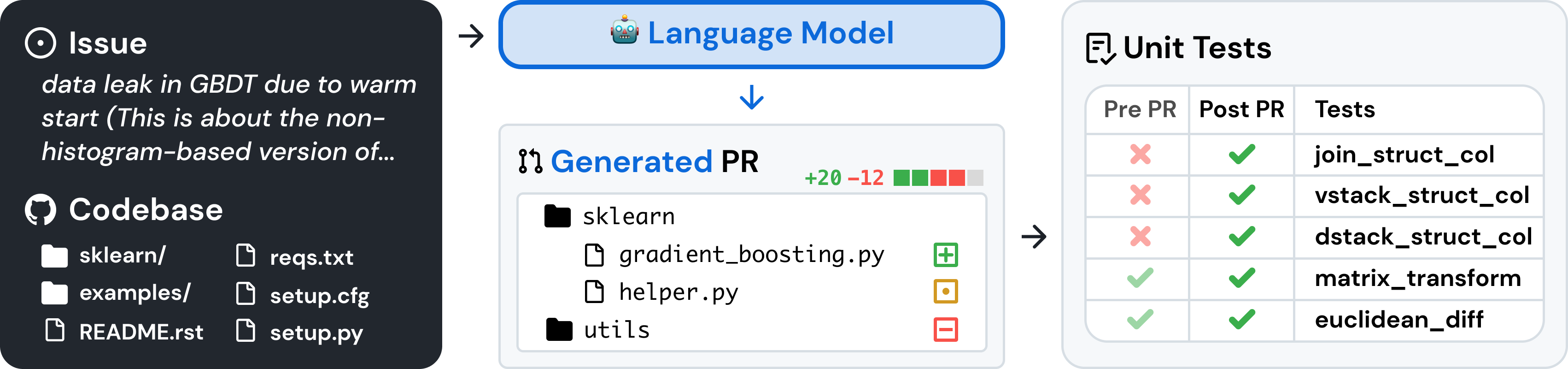

We collect 2,294 task instances by crawling pull requests and issues from 12 popular Python repositories. Each instance is based on a pull request that (1) is associated with an issue and (2) modifies one or more test-related files.
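The two selection criteria above can be expressed as a simple filter over crawled pull requests. This is a minimal sketch, not the actual collection pipeline: the `PullRequest` record, its fields, and the `is_test_file` path heuristic are hypothetical stand-ins for whatever metadata a crawler would gather.

```python
from dataclasses import dataclass, field


@dataclass
class PullRequest:
    """Hypothetical, minimal view of a crawled pull request."""
    number: int
    linked_issues: list[int] = field(default_factory=list)  # issue numbers the PR references
    changed_files: list[str] = field(default_factory=list)  # repository-relative paths


def is_test_file(path: str) -> bool:
    # Assumed heuristic (not the official rule): a path is test-related if any
    # directory or file name starts with "test" (e.g. tests/, test_core.py)
    # or the file name ends in "_test.py".
    parts = path.lower().split("/")
    return any(p.startswith("test") or p.endswith("_test.py") for p in parts)


def is_candidate(pr: PullRequest) -> bool:
    # Criterion (1): the PR is associated with at least one issue.
    # Criterion (2): the PR modifies at least one test-related file.
    return bool(pr.linked_issues) and any(is_test_file(f) for f in pr.changed_files)


prs = [
    PullRequest(101, linked_issues=[99], changed_files=["src/core.py", "tests/test_core.py"]),
    PullRequest(102, linked_issues=[], changed_files=["tests/test_io.py"]),  # no linked issue
    PullRequest(103, linked_issues=[98], changed_files=["docs/index.rst"]),  # no test change
]
print([pr.number for pr in prs if is_candidate(pr)])  # [101]
```

Only PR 101 satisfies both criteria; a real crawler would also need repository-specific rules for recognizing test files.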

For each instance, we construct an execution environment (a Docker image) with the repository successfully installed at the commit the pull request is based on. Without the pull request's changes, one or more tests fail; after the pull request is merged, the same tests pass. These "Fail-to-Pass" tests are the primary signal for evaluation.
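Given test outcomes from a run without the pull request's changes and a run with them, the Fail-to-Pass set is every test that does not pass in the first run but passes in the second. The sketch below assumes outcomes have already been collected into dictionaries mapping a test identifier to a status string; the `fail_to_pass` function and the `"PASSED"`/`"FAILED"` labels are hypothetical, not the format of the official harness.

```python
def fail_to_pass(before: dict[str, str], after: dict[str, str]) -> list[str]:
    """Return tests that do not pass without the PR's changes but pass with them.

    `before` and `after` map a test identifier to an outcome string such as
    "PASSED" or "FAILED" (a hypothetical format chosen for this sketch).
    A test missing from `before` is treated as not passing.
    """
    return sorted(
        test
        for test, outcome in after.items()
        if outcome == "PASSED" and before.get(test) != "PASSED"
    )


before = {"test_parse_empty": "FAILED", "test_parse_basic": "PASSED", "test_roundtrip": "FAILED"}
after = {"test_parse_empty": "PASSED", "test_parse_basic": "PASSED", "test_roundtrip": "FAILED"}
print(fail_to_pass(before, after))  # ['test_parse_empty']
```

`test_parse_basic` passes in both runs and `test_roundtrip` fails in both, so neither is a Fail-to-Pass test.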

SWE-bench evaluation works as follows. For each task instance, an AI system is given the issue text and must modify the codebase to resolve the described issue. When the AI system is finished, we run the Fail-to-Pass tests described above to check whether the issue was successfully resolved.
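Under this scheme, an instance counts as resolved when every Fail-to-Pass test passes after the AI system's changes are applied, and a score is the percentage of instances resolved. This is a minimal sketch that checks only the Fail-to-Pass tests, assuming results are already collected into a status dictionary; `is_resolved` and `resolve_rate` are hypothetical helpers, not the official evaluation harness.

```python
def is_resolved(f2p_tests: list[str], results: dict[str, str]) -> bool:
    # `results` holds test outcomes after applying the AI system's changes
    # (hypothetical "PASSED"/"FAILED" labels). Every Fail-to-Pass test must
    # pass; a missing result (e.g. the test run crashed) counts as a failure.
    return all(results.get(t) == "PASSED" for t in f2p_tests)


def resolve_rate(outcomes: list[bool]) -> float:
    # Percentage of task instances resolved, e.g. 12.47 for 12.47%.
    return 100.0 * sum(outcomes) / len(outcomes) if outcomes else 0.0


f2p = ["test_parse_empty"]
outcomes = [
    is_resolved(f2p, {"test_parse_empty": "PASSED"}),
    is_resolved(f2p, {"test_parse_empty": "FAILED"}),
    is_resolved(f2p, {}),  # changes broke the test run entirely
]
print(outcomes)                          # [True, False, False]
print(f"{resolve_rate(outcomes):.2f}%")  # 33.33%
```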

SWE-bench was released in October 2023, when our initial Retrieval Augmented Generation (RAG) baseline scored just 1.96%. Our follow-up work, SWE-agent, was the first agent-based AI system introduced for performing software engineering tasks, achieving a score of 12.47% on SWE-bench. You can train your own agentic software engineering models using our SWE-smith dataset.

Resources

In the original SWE-bench work, we fine-tuned CodeLlama (Rozière et al. 2023) to generate patches directly from the issue text together with one or more files from the repository. We provide all assets for the resulting SWE-Llama models, including the training data and model weights.
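To make the input side concrete, the sketch below assembles the issue text and the selected files into a single prompt string for a patch-generating model. The tag layout, the `build_prompt` name, and the final instruction line are illustrative assumptions, not the exact prompt format used to train SWE-Llama.

```python
def build_prompt(issue_text: str, files: dict[str, str]) -> str:
    """Concatenate an issue and file contents into one model input.

    `files` maps a repository-relative path to that file's source text.
    The layout below is hypothetical and chosen only for readability.
    """
    parts = ["<issue>", issue_text.strip(), "</issue>", "<code>"]
    for path, content in files.items():
        # Delimit each file so the model can tell where one ends and the next begins.
        parts.append(f"[start of {path}]")
        parts.append(content.rstrip("\n"))
        parts.append(f"[end of {path}]")
    parts.append("</code>")
    # The model is expected to answer with a patch (e.g. a unified diff).
    parts.append("Respond with a patch in unified diff format that resolves the issue.")
    return "\n".join(parts)


prompt = build_prompt(
    "Parsing an empty string raises IndexError.",
    {"pkg/parse.py": "def parse(s):\n    return s[0]\n"},
)
print(prompt)
```

Keeping each file between explicit start and end markers is one simple way to let a model cite file paths when it writes the patch.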

Base and pre-processed datasets (Oracle, 13K, 27K, 40K, 50K Llama) are available on HuggingFace.

SWE-Llama model weights:

Citation

If you use SWE-bench in your research, please cite our paper:

@inproceedings{jimenez2024swebench,
  title={{SWE}-bench: Can Language Models Resolve Real-world Github Issues?},
  author={Carlos E Jimenez and John Yang and Alexander Wettig and Shunyu Yao and Kexin Pei and Ofir Press and Karthik R Narasimhan},
  booktitle={The Twelfth International Conference on Learning Representations},
  year={2024},
  url={https://openreview.net/forum?id=VTF8yNQM66}
}

Jimenez, C. E., Yang, J., Wettig, A., Yao, S., Pei, K., Press, O., & Narasimhan, K. R. (2024). SWE-bench: Can Language Models Resolve Real-world Github Issues? In The Twelfth International Conference on Learning Representations. https://openreview.net/forum?id=VTF8yNQM66

Jimenez, Carlos E., et al. "SWE-bench: Can Language Models Resolve Real-world Github Issues?" The Twelfth International Conference on Learning Representations, 2024.